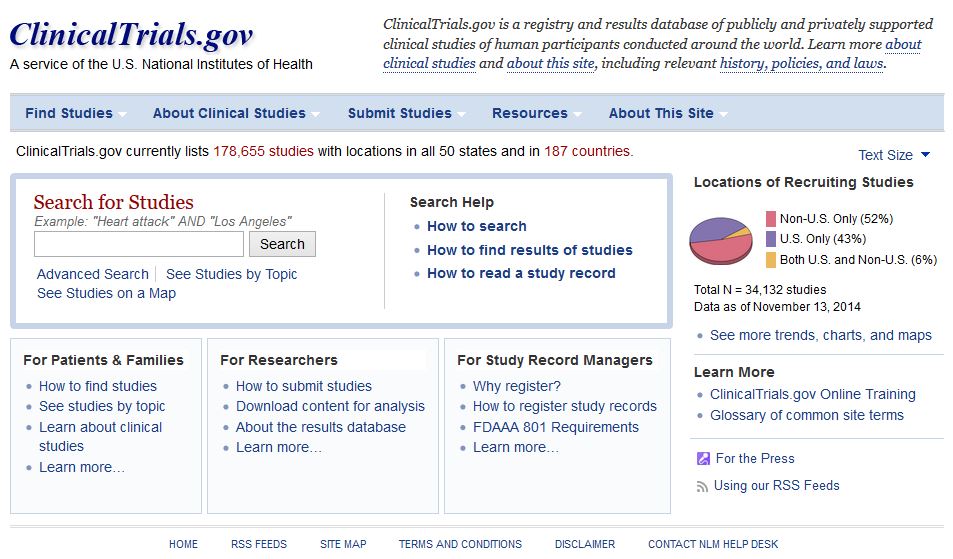

Why, oh why, does the National Library of Medicine remove investigator contact details from ClinicalTrials.gov after completion of a trial? We need to contact them to ask why their trial is not published!

BMJ letter from us on the subject:

For successful translation of results from research into practice there must also be timely dissemination of research findings (1). The Food and Drug Administration Amendments Act requires trials that are subject to mandatory reporting to post results within 12 months of study completion on ClinicalTrials.gov (2). Despite this initiative, fewer than a quarter of trial investigators comply (3).

Maruani and colleagues report that email reminders of the legal requirement to post results significantly improve reporting at six months (4). Any intervention that increases dissemination of clinical trial results is welcome and the authors should be commended for their efforts.

However, we do not understand the authors’ described methods. They report that the cohort included trials “that had available contact details (email addresses) of responsible parties”, and go on to state that they “extracted the email addresses of responsible parties from ClinicalTrials.gov”. In the discussion they highlight “the need for updating email addresses of responsible parties in ClinicalTrials.gov”.

We would be interested to know how this is possible, as all email addresses for completed trials are removed from ClinicalTrials.gov as a matter of policy by the National Library of Medicine (Table 1).

We asked the National Library of Medicine (NLM) to comment on this. In addition, we asked what advice they would give a patient who had taken part in a completed clinical trial, and wished to contact the investigators to enquire about trial results. They responded:

“If the record is closed or completed, we remove all contact information in the location and contact section since there is no reason why a potential patient would need to contact them.”

The NLM will not provide contact email addresses on request, despite these previously being available on ClinicalTrials.gov while the trial was recruiting.

The removal of previously published contact information from ClinicalTrials.gov has important implications for transparency in trial reporting. Interventions such as that proposed by Maruani and colleagues cannot be delivered at scale while this practice exists. Searching for contact details manually with Google or PubMed is difficult at best and impossible for many, including patients who have participated in a study and wish to contact the investigators about trial results.

References

1. Ross JS, Tse T, Zarin DA, Xu H, Zhou L, Krumholz HM. Publication of NIH funded trials registered in ClinicalTrials.gov: cross sectional analysis. BMJ 2012;344: d7292.

2. Zarin DA, Tse T, Williams RJ, Califf RM, Ide NC. The ClinicalTrials.gov results database–update and key issues. N Engl J Med 2011;364(9): 852-860.

3. Prayle AP, Hurley MN, Smyth AR. Compliance with mandatory reporting of clinical trial results on ClinicalTrials.gov: cross sectional study. BMJ 2012;344: d7373.

4. Maruani A, Boutron I, Baron G, Ravaud P. Impact of sending email reminders of the legal requirement for posting results on ClinicalTrials.gov: cohort embedded pragmatic randomized controlled trial. BMJ 2014;349: g5579.